new

improved

fixed

Chat

Prompts

AI

July 29th 2024

Chat Prompt Preview

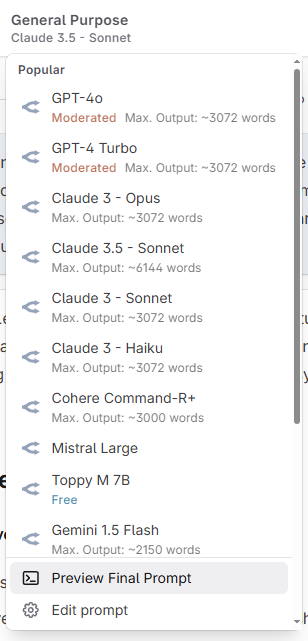

The long-awaited chat prompt preview is here! You can now see exactly what gets sent to the AI when you enter a question or message. The prompt preview button is in the model switcher in the chat thread header (next to "Select AI").

Custom Prompts

Custom prompts can be a bit daunting to look at if you are unfamiliar with programming languages, so we added support for comments inside your custom prompts! The system default prompts now include a couple of comments that tell you what each section does.

To turn text into a comment, wrap it in {! and !}, like so: This is some text {! and this is a comment !}. That way you can also disable certain functionality in your prompts without having to remove it altogether.
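To make the comment syntax concrete, here is a minimal Python sketch of how {! ... !} spans could be stripped from a prompt before it is sent to the AI. This is only an illustration: the function name `strip_prompt_comments` is hypothetical, and the sketch assumes comments are not nested. The actual prompt engine may handle them differently.

```python
import re

# Hypothetical helper for illustration; not the app's actual implementation.
# Assumption: comments are not nested, so a non-greedy match is enough.
COMMENT_PATTERN = re.compile(r"\{!.*?!\}", re.DOTALL)


def strip_prompt_comments(prompt: str) -> str:
    """Remove every {! ... !} span, including ones that cover several lines."""
    return COMMENT_PATTERN.sub("", prompt)


example = "This is some text {! and this is a comment !}"
print(repr(strip_prompt_comments(example)))
```

Because the pattern also matches across line breaks, wrapping a whole block of instructions in {! and !} would remove it from the output in this sketch, which mirrors the "disable without deleting" use described above.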

- Added support for underscores in numbers, so you can now write 2_000 to make it easier on the eyes

- Added support for 'dangling' commas in prompt functions, so it no longer breaks when you write codex.get("name",)
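The two parsing changes above can be illustrated with a small Python sketch. Both helpers (`parse_number` and `split_arguments`) are hypothetical names invented for this example, and the argument splitter is deliberately naive: it assumes no commas appear inside quoted strings. It shows the general idea only, not how the prompt language is actually parsed.

```python
from typing import List


def parse_number(literal: str) -> int:
    """Read an integer literal, ignoring underscores used as digit separators."""
    return int(literal.replace("_", ""))


def split_arguments(arg_text: str) -> List[str]:
    """Split a comma-separated argument list, tolerating one dangling comma.

    Naive on purpose: commas inside quoted strings are not handled.
    """
    parts = [part.strip() for part in arg_text.split(",")]
    if parts and parts[-1] == "":
        parts.pop()  # drop the empty piece left behind by a trailing comma
    return parts


print(parse_number("2_000"))
print(split_arguments('"name",'))
```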

Other Changes

- Fixed a bug where older codex entry versions might have been used for AI generation. If you got weird output lately, this might have been the cause.

- Nested entries of codex entries directly attached to a scene will now be included as well. This is how it always should've worked, so we count this as a 'bug fix'.

- We now cache the OpenRouter model list, so if OpenRouter has downtime or other issues, we should still have a working copy (fingers crossed!)

- The default scene summarization prompt has been tweaked to not glitch into the scene's POV for writing (it should now always write in third person, present tense).

- Other various fixes and improvements
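The model list caching mentioned in the list above follows a common "last known good copy" pattern. Here is a minimal Python sketch of that pattern. Everything in it is an assumption made for illustration: the class name `ModelListCache`, the injected `fetch` callable, and the in-memory storage are hypothetical and do not describe the app's actual implementation or any real OpenRouter client.

```python
from typing import Callable, List, Optional


class ModelListCache:
    """Keep the last successfully fetched model list as a fallback copy."""

    def __init__(self, fetch: Callable[[], List[str]]) -> None:
        # `fetch` is a hypothetical callable that returns the current model
        # list and raises an exception when the upstream service is down.
        self._fetch = fetch
        self._cached: Optional[List[str]] = None

    def get(self) -> List[str]:
        try:
            models = self._fetch()
        except Exception:
            if self._cached is None:
                raise  # nothing cached yet, so the failure cannot be hidden
            return self._cached  # serve the last known good copy
        self._cached = list(models)
        return self._cached
```

With this shape, a failed refresh after at least one successful fetch returns the previous list instead of an error. A real implementation would likely also persist the copy and expire it after some time, which this sketch leaves out.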